Key Takeaways

- Ignoring high volumes of low-priority alerts can carry high risk. Low true-positive rates still matter when alert volumes are high; missed incidents can escalate into costly breaches.

- AI can expand alert coverage without increasing headcount. By automating triage and surfacing “long-tail” threats, AI helps teams investigate more alerts intelligently and efficiently.

- Risk-based modeling helps quantify the value of broader coverage. Using conservative assumptions, teams can estimate savings up to $500K annually by reducing the chances of missed high-impact threats.

Introduction

Security teams don’t ignore alerts because they want to; they do it because capacity is limited. High alert volumes force trade-offs, and many lower-confidence or noisy alerts go uninvestigated or get only a cursory review. While most are almost certainly benign, some may contain real threats that slip through unnoticed. In this article, you will learn how to model the potential cost of missed alerts, understand where AI fits into expanding coverage, and see how small changes in triage scope can have a measurable impact. The goal is to make smarter decisions about what gets reviewed and why.

A Realistic Model of Missed Alerts

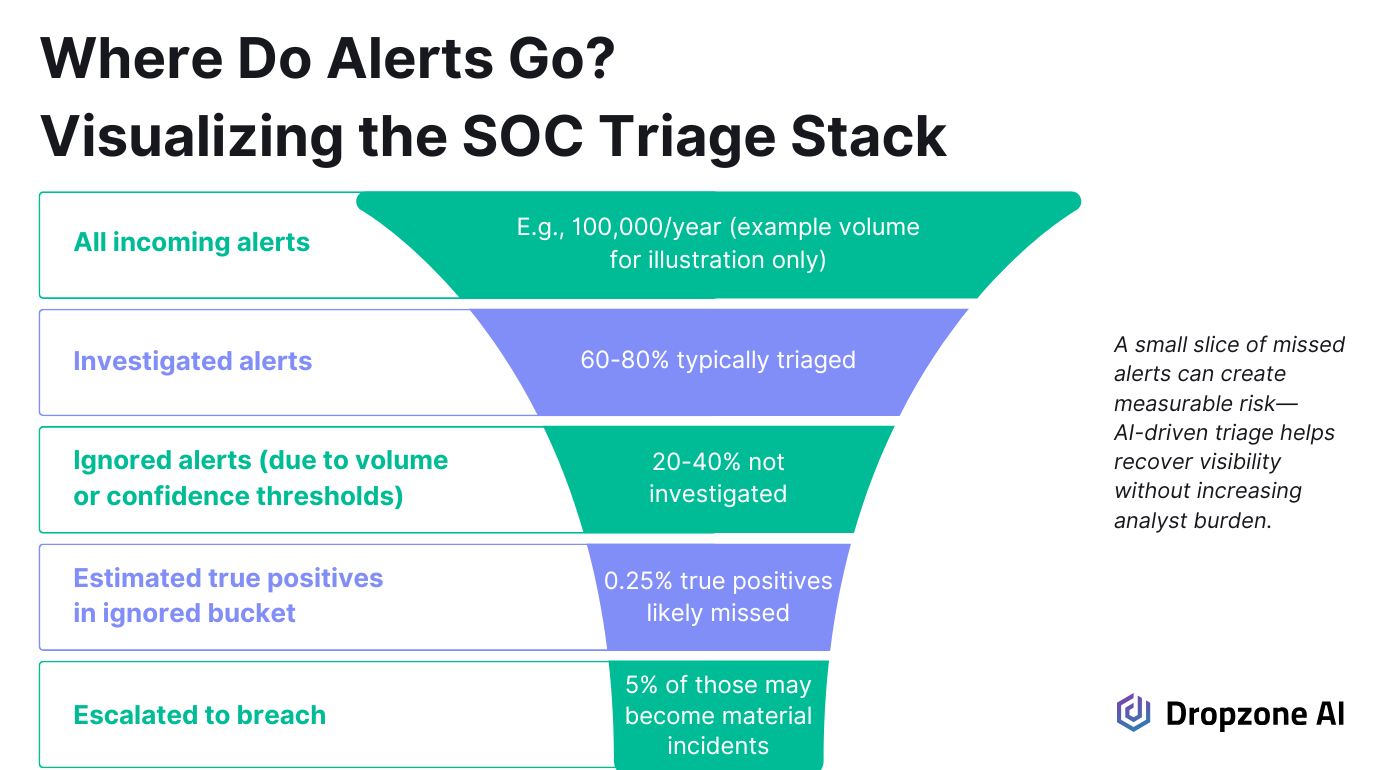

Most security teams have to make trade-offs when managing alert volume. Limited capacity means some alerts, especially low-confidence or repetitive ones, don’t get investigated. While most are likely noise, a small percentage may carry real risk.

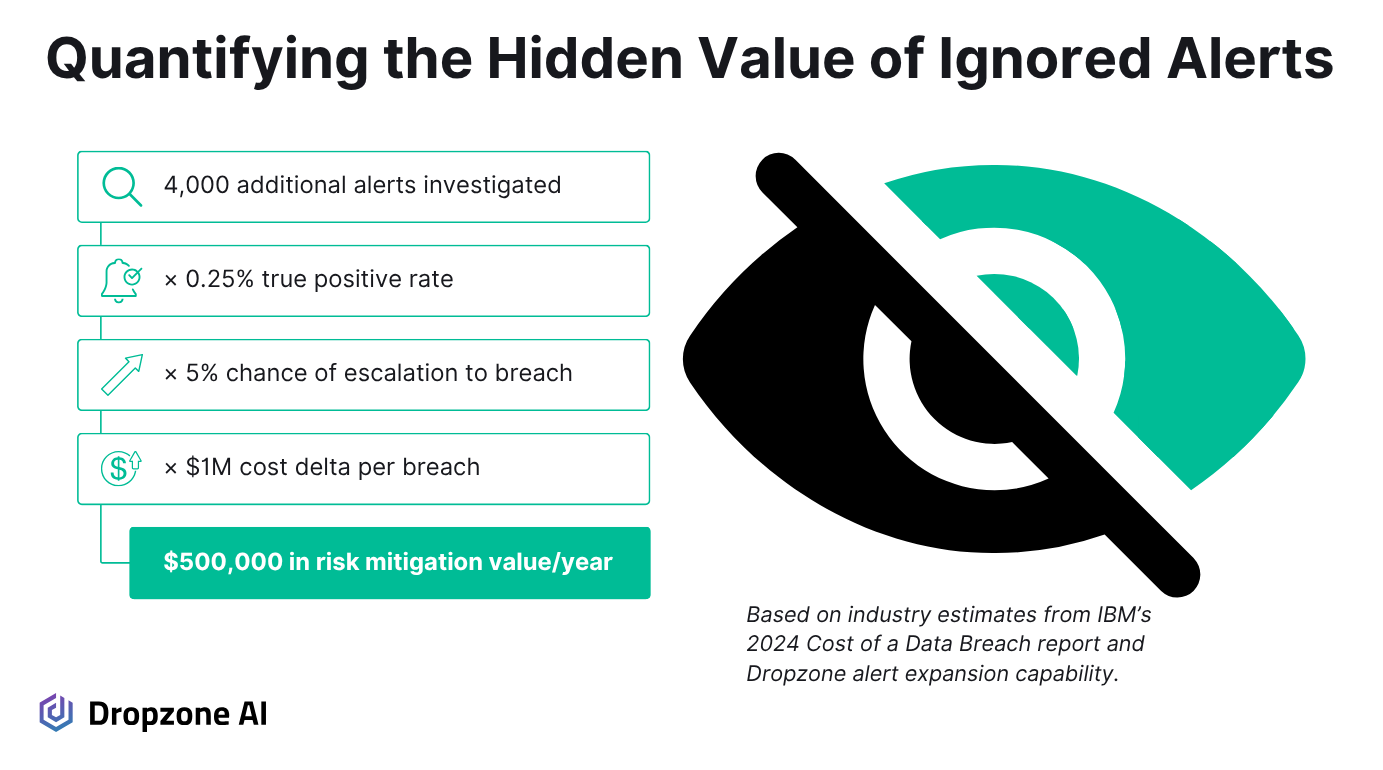

If alert coverage expands by 4,000 additional investigations per year, even a conservative estimate shows impact. Assuming 0.25% of these previously uninvestigated alerts are true positives, around 10 actual threats would otherwise have gone undetected. If 5% of those escalate into serious incidents, that's an expected 0.5 significant breaches avoided per year, or roughly one every two years.

Industry reporting helps quantify that risk. IBM’s Cost of a Data Breach Report 2024 estimates that attacker-disclosed breaches cost nearly $1 million more than those caught internally. (Attacker-disclosed means that the organization only finds out about the breach when it is notified by the attacker.) That difference reflects more data stolen, deleted, or ransomed; more extensive remediation; and higher reputational fallout.

Plugging those assumptions into a model: 4,000 alerts × 0.25% true positives × 5% escalation × $1 million = $500,000 in expected avoided breach cost per year. This calculation uses conservative figures and a narrow scope, making it a reasonable way to start conversations around coverage and risk.
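The same arithmetic can be written as a small function so the inputs are easy to swap for your own numbers. This is only a sketch of the expected-value model described above; the function and parameter names are illustrative, and the four inputs are the article's assumptions rather than measured values.

```python
def avoided_breach_cost(extra_alerts, true_positive_rate, escalation_rate, extra_cost_per_breach):
    """Expected annual breach cost avoided by investigating more alerts.

    All four inputs are assumptions to replace with your own estimates.
    """
    # Real threats hiding among the newly investigated alerts
    true_positives = extra_alerts * true_positive_rate
    # Share of those threats expected to become serious incidents
    serious_incidents = true_positives * escalation_rate
    # Expected cost avoided by catching them internally
    savings = serious_incidents * extra_cost_per_breach
    return true_positives, serious_incidents, savings


# Figures used in this article
tp, incidents, savings = avoided_breach_cost(
    extra_alerts=4_000,
    true_positive_rate=0.0025,        # 0.25%
    escalation_rate=0.05,             # 5%
    extra_cost_per_breach=1_000_000,  # ~$1M gap, attacker-disclosed vs. caught internally
)
print(f"{tp:.1f} true positives, {incidents:.2f} serious incidents, ${savings:,.0f} avoided")
# prints: 10.0 true positives, 0.50 serious incidents, $500,000 avoided
```

Because the result is an expected value, 0.5 serious incidents per year is best read as roughly one avoided breach every two years, not a guaranteed saving in any given year.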

The takeaway isn’t to investigate everything; it’s to be data-driven and measure what’s left on the table in terms of risk. Use that data to shape smarter alert-handling strategies and communicate to your CFO why your security program is structured the way it is. Small gains in coverage can carry measurable financial upside.

Understanding the Nature of Ignored Alerts

Most ignored alerts come from patterns that analysts have learned to deprioritize—even if subconsciously. These include repetitive signals, low-confidence detections, and sources known to generate noise. Over time, teams build mental models for what can be safely skipped based on volume and outcomes.

That approach is often necessary to manage the workload (and possibly your mental health). But it also means the rare true positives that blend in with lower-value alerts may never be reviewed. When alert volume is high, even a minimal true-positive rate across skipped alerts can result in missed incidents.

For example, if only 0.25% of ignored alerts are legitimate threats, that may sound negligible until applied to thousands of cases. Those few incidents could represent lateral movement, early-stage compromise, or suspicious behavior that doesn't match current detection thresholds.
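To see why a small rate stops being negligible at volume, it helps to ask how likely it is that at least one real threat sits among the skipped alerts. The sketch below assumes each ignored alert is independently a true positive with the same probability, which is a simplification (real alerts are often correlated); the function name is illustrative.

```python
def prob_at_least_one_threat(skipped_alerts: int, true_positive_rate: float) -> float:
    """Chance that one or more skipped alerts is a real threat.

    Assumes alerts are independent with an identical true-positive rate.
    """
    # Probability that every skipped alert is benign, subtracted from 1
    return 1.0 - (1.0 - true_positive_rate) ** skipped_alerts


RATE = 0.0025  # the 0.25% assumption used in this article

for skipped in (100, 1_000, 4_000):
    expected = skipped * RATE
    chance = prob_at_least_one_threat(skipped, RATE)
    print(f"{skipped:>5} skipped alerts: ~{expected:.1f} expected threats, "
          f"{chance:.1%} chance of at least one")
```

Under these assumptions, the chance of missing at least one real threat climbs from a minority of cases at 100 skipped alerts to a near certainty at 4,000.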

The goal isn't to chase every alert; that would increase analyst fatigue and reduce efficiency. Instead, the objective is to improve coverage by prioritizing higher-impact gaps and low-confidence alerts with indicators suggesting further review might be worth the time.

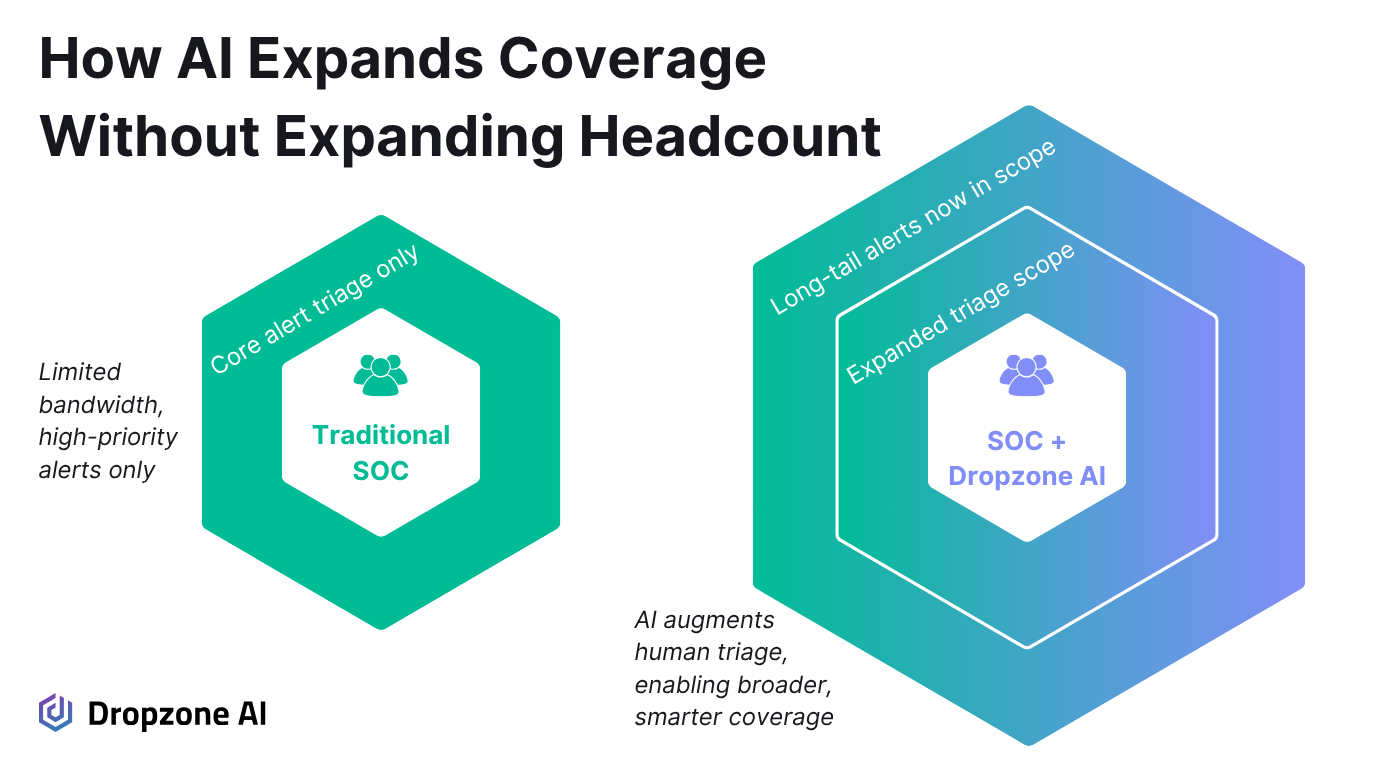

AI SOC agents can add structure to what gets extra scrutiny from a human. They can elevate long-tail signals, flag correlations between noisy alerts and known indicators, and help analysts focus on areas with greater potential risk. That lets teams increase coverage without slowing down.

Attackers Know How to Hide in Noise

Sophisticated attackers know about the Pyramid of Pain, a conceptual model for threat detection that posits that artifacts such as hashes, IP addresses, and domains are relatively easy to detect but also trivial for attackers to change. Detections focused on behavior (tactics, techniques, and procedures, or TTPs) are much harder for attackers to avoid.

Savvy attackers will avoid easily detectable indicators of compromise (IOCs), but they must use TTPs to achieve their objectives. They know that defenders likely have some type of alerting set up to detect these TTPs. The best way for them to avoid detection is to make their TTPs look like legitimate activity in the environment.

In other words, they’re hiding their actions in low-priority detections, the types of alerts that SOCs see all the time. This “living off the land” strategy means using the same tools that sysadmins do, such as the native Windows utilities WMI and PowerShell, and hiding command and control through DNS tunneling.

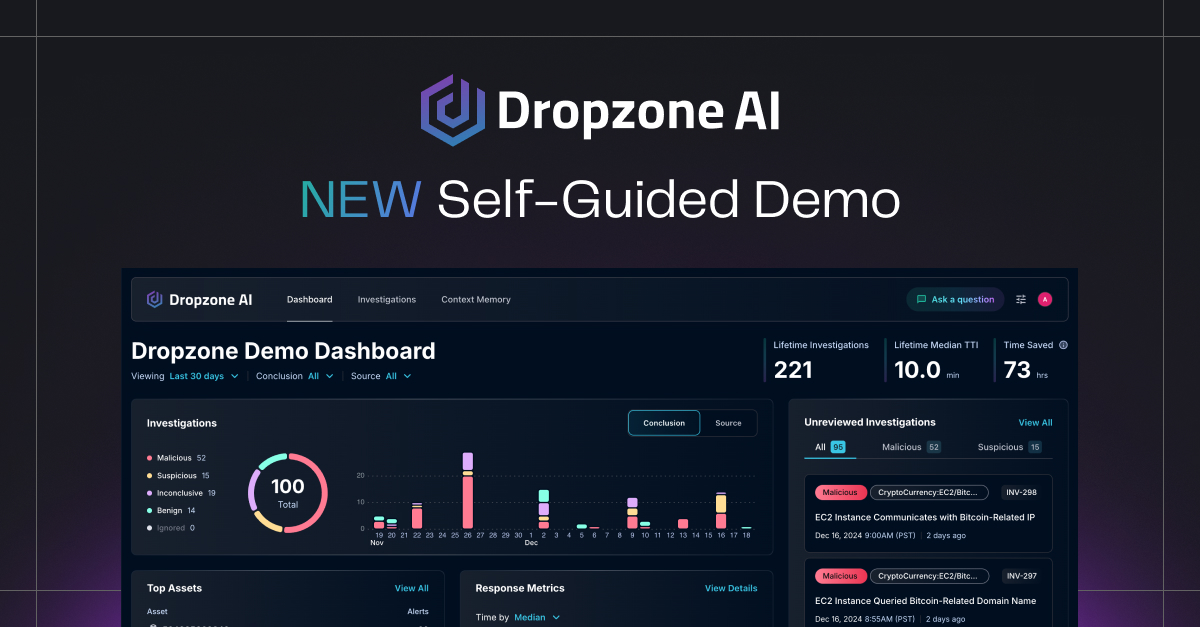

How AI SOC Agents Improve Alert Investigation Coverage

AI SOC agents like Dropzone AI allow security teams to increase alert coverage without increasing analyst headcount. Instead of painstakingly reviewing every alert by hand, teams can use AI automation to prioritize and enrich alerts. That shift allows teams to expand investigation scope without overwhelming staff or slowing response times.

One of AI's most practical uses is surfacing long-tail threat alerts that don’t meet current triage thresholds but still contain a signal. These often include low-confidence or context-poor alerts that look unimportant when reviewed in isolation. But when seen in the right context or linked to other activities, they may indicate meaningful risk.

AI SOC agents can detect those patterns and bring them forward before they escalate. This helps close the gap between what’s reviewed and what matters, giving teams a broader view without sacrificing focus. It also reduces reliance on rigid alert scoring as your primary strategy for prioritizing which alerts deserve a closer look.
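As a rough illustration of linking low-confidence alerts to one another, the sketch below flags hosts where several different low-confidence rules fire within a short window. It is a deliberately simple heuristic and not how Dropzone AI or any particular product works; the `Alert` fields, rule names, and thresholds are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    host: str
    rule: str          # hypothetical detection rule name
    confidence: float  # 0.0 to 1.0, as scored by the detection source
    seen_at: datetime


def hosts_worth_review(alerts, max_confidence=0.3,
                       window=timedelta(hours=1), min_distinct_rules=3):
    """Return hosts where several different low-confidence rules fired close together."""
    by_host = defaultdict(list)
    for alert in alerts:
        if alert.confidence <= max_confidence:
            by_host[alert.host].append(alert)

    flagged = {}
    for host, host_alerts in by_host.items():
        host_alerts.sort(key=lambda a: a.seen_at)
        for i, first in enumerate(host_alerts):
            # Distinct rules firing within `window` after this alert
            rules = {a.rule for a in host_alerts[i:] if a.seen_at - first.seen_at <= window}
            if len(rules) >= min_distinct_rules:
                flagged[host] = sorted(rules)
                break
    return flagged


t0 = datetime(2024, 1, 1, 9, 0)
alerts = [
    Alert("ws-17", "powershell_encoded_command", 0.20, t0),
    Alert("ws-17", "wmi_remote_exec", 0.25, t0 + timedelta(minutes=20)),
    Alert("ws-17", "dns_long_query", 0.10, t0 + timedelta(minutes=45)),
    Alert("ws-42", "dns_long_query", 0.10, t0),
    Alert("ws-42", "dns_long_query", 0.10, t0 + timedelta(hours=5)),
]
result = hosts_worth_review(alerts)
print(result)
# prints: {'ws-17': ['dns_long_query', 'powershell_encoded_command', 'wmi_remote_exec']}
```

Each alert on `ws-17` would likely be skipped on its own, but three different rules on one host within an hour is the kind of pattern that may deserve a human look. The repeated DNS alert on `ws-42` stays below the threshold.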

When AI is framed only as a way to reduce workload, it can be hard to quantify its broader value. But the investment becomes easier to justify when positioned as a tool that reduces risk. Coverage gains have a measurable impact on risk reduction, which maps more directly to how budget holders evaluate security outcomes.

Security leaders need to show that AI helps the team cover more ground, make faster decisions, and catch what might otherwise be missed. That’s where the operational and financial benefits start to align. For another angle on risk, read our blog on how AI SOC agents like Dropzone AI reduce risk by speeding response.

Conclusion

AI can do more than accelerate triage; it can help teams expand alert coverage in a cost-effective manner. Broader visibility into the alert queue reduces the chances of missing low-signal but high-impact threats. Even modest gains in review volume can translate into significant reductions in breach risk. Simple models using conservative data can support stronger business cases for AI investment. If you're ready to see how this works for yourself, explore our self-guided demo.